How to integrate a static analyzer into the development process

This post will be useful if you are about to choose or implement a static analyzer. How do you set up a process in which code vulnerabilities are not only detected but also fixed? In this post, I’ll try to help you with this challenge.

What is ahead?

If your ultimate goal is guaranteed vulnerability fixing, implementing an analyzer will take time, from a couple of weeks to several months in my experience. In the case of a large company, be ready for a lot of approvals, since there are hundreds of code bases to be analyzed and dozens of teams with their own development practices.

To add a new development stage, i.e. static analysis, you will have to study how each of the teams works and propose a solution that is convenient for everyone. Small companies may lack any established development procedure at all, in which case implementing an analyzer is a good opportunity to address this shortcoming as well. For smoother integration, we strongly recommend learning about your analyzer’s integration capabilities in advance.

What integration capabilities may be available?

As a rule, an analyzer has a non-graphical interface (CLI, REST API) that lets you integrate it into any process. Some analyzers feature ready-to-use integrations with build tools and development environments, version control systems (Git, SVN), CI/CD servers (Jenkins, TeamCity, TFS), project management systems (Jira), and user management systems (Active Directory). The more of these integrations match the tools your company already uses, the easier the implementation will be.
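As a rough illustration of the REST option, the sketch below prepares a request that would ask an analyzer to scan a branch. The host, the `/api/scans` path, the JSON field names, and the bearer-token header are all hypothetical; a real analyzer's API will differ, so treat this as a shape rather than a recipe.

```python
import json
import urllib.request

# Hypothetical values: the base URL, the /api/scans path, the JSON fields,
# and the bearer-token header are assumptions, not a real analyzer's API.
ANALYZER_URL = "https://analyzer.example.com"


def build_scan_request(repo_url: str, branch: str, token: str) -> urllib.request.Request:
    """Prepare (but do not send) an HTTP request that asks the analyzer to scan a branch."""
    payload = json.dumps({"repository": repo_url, "branch": branch}).encode("utf-8")
    return urllib.request.Request(
        url=f"{ANALYZER_URL}/api/scans",
        data=payload,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
    )


request = build_scan_request("https://git.example.com/team/app.git", "master", "secret-token")
print(request.get_method(), request.full_url)
```

Passing the prepared request to `urllib.request.urlopen` would actually send it; the sketch stops short of that so it can run without a server.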

How to set up a process?

Here is a standard case involving information security, release management, and development functions. Usually, an analyzer implementation is initiated by an information security team. The problem here is to agree on who will do what and when. We divide the process into the following steps: run analysis, handle analysis results, create a vulnerability fixing request, fix the vulnerability, and check fixing results. Let us look at each step a bit closer.

Step 1. Run analysis

Analysis can be performed either manually or automatically. Each method has its own pros and cons.

Manually

Run the analyzer, upload the code, and view the results, all by yourself.

While a security officer may be able to cope with a dozen or so projects at any one time, automation is required to manage hundreds of projects.

Automatically

Decide how often and when you need to analyze the code: for example, before releasing it to production, on a schedule (say, once a week), or after each change.

If an app is updated rarely but still requires security analysis, configure analysis to start after each change.

If many people work on the code, there are many codelines, or changes are merged frequently, it is better to analyze the code often without interfering with the development process. For example, analysis can be performed when a merge request into the mainline is created or when the status of a task on the respective codeline changes. In this case, integration with a version control or project management system will be helpful. In addition, CI/CD integration will allow you to include analysis as one of the build steps.
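The trigger choices described above can be written down as a small policy function. This is only a sketch: the `Trigger` names, the `Project` fields, and the rule that splits projects into "frequently changed" and "rarely changed" are illustrative assumptions, not settings of any particular analyzer or CI server.

```python
from dataclasses import dataclass
from enum import Enum


class Trigger(Enum):
    PUSH = "push"                            # any change to a codeline
    MERGE_REQUEST = "merge_request"          # request to merge into the mainline
    TASK_STATUS_CHANGE = "task_status"       # task on the codeline changed status
    SCHEDULE = "schedule"                    # timer, e.g. once a week


@dataclass(frozen=True)
class Project:
    name: str
    frequently_changed: bool  # many contributors, codelines, or merges


def triggers_for(project: Project) -> set[Trigger]:
    """Pick the events that should start analysis for this project."""
    if project.frequently_changed:
        # Analyze often, but only at points that do not interrupt development.
        return {Trigger.MERGE_REQUEST, Trigger.TASK_STATUS_CHANGE}
    # Rarely updated apps can afford a scan after every change.
    return {Trigger.PUSH}


def should_run_analysis(project: Project, event: Trigger) -> bool:
    return event in triggers_for(project)


busy = Project("billing", frequently_changed=True)
quiet = Project("intranet-wiki", frequently_changed=False)
print(should_run_analysis(busy, Trigger.MERGE_REQUEST))
print(should_run_analysis(quiet, Trigger.PUSH))
```

A CI/CD job or a version control hook would call `should_run_analysis` with the event it received and start the scan only when the function returns `True`.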

Set up a schedule so that you have enough time to handle the results.

Step 2. Handle analysis results

Rather than making developers eliminate all detected vulnerabilities at once, first let a security officer verify them. The results of the first analysis may scare you: dozens of critical and hundreds of not-so-critical vulnerabilities, plus thousands of false positives. What can you do? We recommend fixing vulnerabilities gradually. Your ultimate goal is to fix vulnerabilities in both old and new code. Start by verifying critical vulnerabilities (since they undermine security) and new ones (since new code is easier to correct).

Use filters to sort vulnerabilities by severity, language, file, and directory. More advanced tools will show only new code vulnerabilities, while hiding those in third-party libraries. What if you have used filters but many vulnerabilities still remain?
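If your analyzer can export results, the same filtering can be reproduced in a few lines. The sketch below assumes a hypothetical `Finding` record and treats `vendor/` and `node_modules/` as third-party directories; real export formats and severity names will differ.

```python
from dataclasses import dataclass

# Assumed severity scale; lower number = verify first.
SEVERITY_ORDER = {"critical": 0, "medium": 1, "low": 2}


@dataclass(frozen=True)
class Finding:
    rule: str
    severity: str
    path: str
    is_new: bool  # True if the finding is in code added since the last scan


def triage_queue(findings, third_party_dirs=("vendor/", "node_modules/")):
    """Hide third-party findings, then order by severity, new code first."""
    own_code = [f for f in findings if not f.path.startswith(third_party_dirs)]
    # Within one severity level, `not f.is_new` puts new findings (False) first.
    return sorted(own_code, key=lambda f: (SEVERITY_ORDER[f.severity], not f.is_new))


findings = [
    Finding("SQL injection", "critical", "src/db.py", is_new=False),
    Finding("Hardcoded password", "medium", "src/config.py", is_new=True),
    Finding("XSS", "critical", "src/views.py", is_new=True),
    Finding("Weak hash", "low", "vendor/lib/hash.py", is_new=True),
]
for finding in triage_queue(findings):
    print(finding.severity, finding.rule, finding.path)
```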

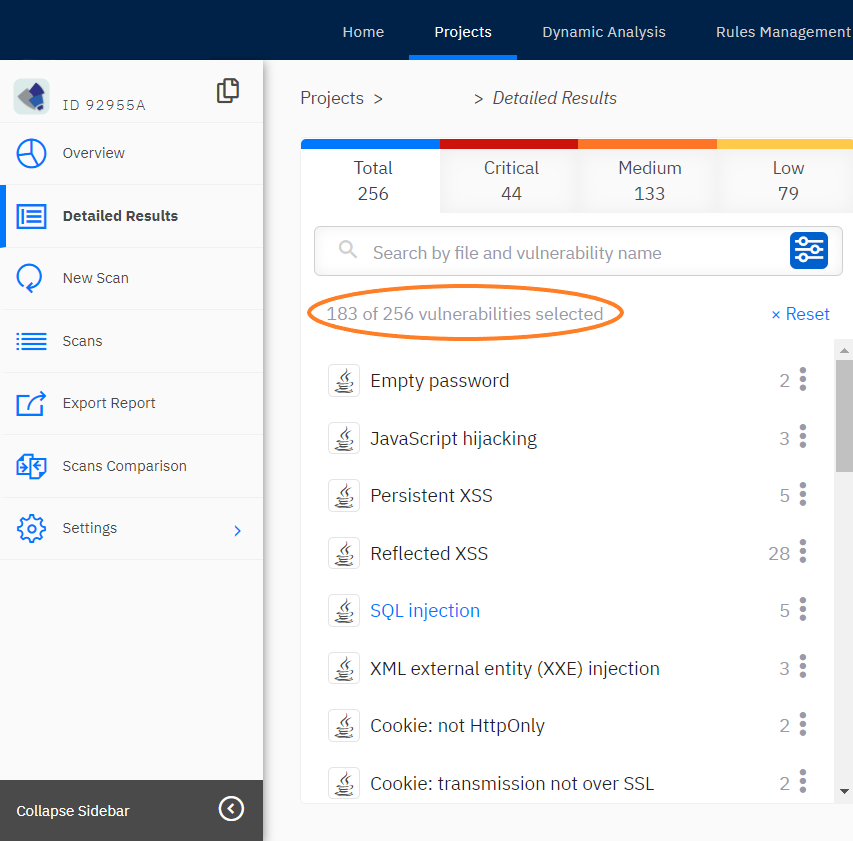

Look at the most probable vulnerabilities first, and use analyzers that assess the probability of a false positive. If there are many vulnerabilities with almost identical severity, filter the results using this metric. In this way, you will process the most probable vulnerabilities faster (see screenshot above).
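As a minimal sketch of that ordering, assume each result carries a `false_positive_probability` between 0 and 1 (a hypothetical field name; analyzers that offer such a metric may expose it differently). Within one severity level, the results least likely to be false positives come first:

```python
from typing import NamedTuple


class ScoredFinding(NamedTuple):
    rule: str
    severity: str
    false_positive_probability: float  # 0.0 = almost certainly a real issue


def most_probable_first(findings, severity):
    """Return findings of one severity, least likely false positives first."""
    same_severity = [f for f in findings if f.severity == severity]
    return sorted(same_severity, key=lambda f: f.false_positive_probability)


scored = [
    ScoredFinding("SQL injection", "critical", 0.10),
    ScoredFinding("Path traversal", "critical", 0.65),
    ScoredFinding("Command injection", "critical", 0.30),
    ScoredFinding("Verbose error message", "low", 0.05),
]
for finding in most_probable_first(scored, "critical"):
    print(finding.rule, finding.false_positive_probability)
```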

Use verification statuses. To freeze verification results, analyzers usually suggest you either confirm a vulnerability or reject it as a false positive. As a rule, these results are left intact during rescanning.
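One way to picture how statuses survive a rescan: suppose every result has a stable identifier that does not change between scans (an assumption; how an analyzer matches results across scans is tool-specific). Statuses set earlier are carried over, and anything not seen before starts as unverified.

```python
UNVERIFIED = "unverified"


def carry_over_statuses(previous: dict[str, str], rescan_ids: list[str]) -> dict[str, str]:
    """Keep earlier verdicts for findings that reappear; mark the rest unverified."""
    return {finding_id: previous.get(finding_id, UNVERIFIED) for finding_id in rescan_ids}


# Hypothetical identifiers and status names, for illustration only.
previous = {"F-1": "confirmed", "F-2": "false_positive", "F-3": "confirmed"}
rescan_ids = ["F-1", "F-2", "F-4"]  # F-3 no longer appears, F-4 is new

print(carry_over_statuses(previous, rescan_ids))
```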

Step 3. Create a vulnerability fixing request

If a developer analyzes their code and immediately fixes vulnerabilities on their own, then no requests are required.

However, if results are reviewed and verified by a security officer, then a task should be created to correct confirmed vulnerabilities. From our experience, this is the step where difficulties arise.

Request (sample form)

In some companies, large (stage-size) tasks are documented in a project management system, such as Jira, while subtasks and bug fixes are managed via GitLab Issues, which are accessible only to a team lead and their team. Sometimes a build cannot be made without creating a separate task in a project management system. An analyzer may have integrations that let you create the necessary requests directly from its interface.

This is a common case when a company employs multiple development teams, each with their own working procedure, and you have to make everyone happy! Such a case raises some questions:

· Should I create a separate project for vulnerability fixing or create tasks in existing ones?

· Is the vulnerability a known bug or something new?

· Should I create a separate task for each vulnerability or group similar ones?

· What should the task description contain: a link to the vulnerability in the analyzer interface, or a link to the code fragment accompanied by a brief problem description?

· And, finally, does a developer need access to results, or just a simple PDF report (with a wording like “see page 257”)?

Of course, it depends on how team work is organized and what error tracking systems are used.
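To make the "separate task or grouped task" question concrete, here is a small sketch that supports both answers. It assumes findings are simple `(rule, location)` pairs and that "similar" means "same rule"; a team could just as well group by file or by component.

```python
from collections import defaultdict


def group_into_tasks(findings, group_similar: bool):
    """Return a list of tasks, each task being a list of findings."""
    if not group_similar:
        # One task per vulnerability.
        return [[finding] for finding in findings]
    # One task per rule, keeping the order in which rules first appear.
    groups = defaultdict(list)
    for finding in findings:
        rule = finding[0]
        groups[rule].append(finding)
    return list(groups.values())


confirmed = [
    ("SQL injection", "src/db.py:42"),
    ("XSS", "src/views.py:10"),
    ("SQL injection", "src/report.py:7"),
]
print(len(group_into_tasks(confirmed, group_similar=False)))
print(len(group_into_tasks(confirmed, group_similar=True)))
```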

If the code is written by contractors with minimal access to internal systems, a PDF report is quite common. Usually, you can include the vulnerabilities of concern in a report and send it to the desired address right from the analyzer interface.

However, if a company does not allow any work to be done without creating a project for a certain task type and allocating resources to it, look for a tool that can be integrated with your system. With the most flexible integration, the tool will generate a task description (including a link to the source code in a version control system) and allow you to complete all required fields, choose a task type, and specify the parent task, the responsible persons, and even the release version.
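The kind of task such an integration generates might look like the sketch below. Everything here is illustrative: the dictionary keys are not the schema of Jira or any other tracker, the finding fields are hypothetical, and the source link assumes GitLab-style blob URLs.

```python
from urllib.parse import quote


def build_fix_task(finding: dict, repo_web_url: str, commit: str, **fields: str) -> dict:
    """Turn one confirmed finding into the fields of a tracker task."""
    # Assumption: the repository browser uses GitLab-style blob URLs
    # (.../-/blob/REF/PATH#Lnn); adjust the pattern for other systems.
    source_link = (
        f"{repo_web_url}/-/blob/{commit}/{quote(finding['path'])}#L{finding['line']}"
    )
    description = (
        f"{finding['rule']} in {finding['path']}, line {finding['line']}.\n"
        f"Source: {source_link}"
    )
    return {
        "summary": f"Fix {finding['rule']} in {finding['path']}",
        "description": description,
        "task_type": fields.get("task_type", "Bug"),
        "parent": fields.get("parent"),
        "assignee": fields.get("assignee"),
        "release": fields.get("release"),
    }


task = build_fix_task(
    {"rule": "SQL injection", "path": "src/db.py", "line": 42},
    repo_web_url="https://gitlab.example.com/team/app",
    commit="3f2a1c9",
    parent="SEC-1",
    assignee="dev1",
    release="2.4.0",
)
print(task["summary"])
print(task["description"])
```

Pinning the link to a commit rather than a branch keeps it pointing at the scanned line even after the file changes.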

Time and effort

If a team usually estimates the effort for each task, vulnerability fixing should be no exception. While estimating, developers can also verify the vulnerabilities. This step sometimes shows that a vulnerability is a false positive or cannot be exploited.

Even then, you may still lack sufficient resources to fix all vulnerabilities at once and will thus need to set and agree on their priorities.

Well, we’ve finally determined the required effort to perform each task and its priority. Now, it’s time for those responsible for release dates (project manager, team lead, etc.) to decide what to fix and when. When just starting to use an analyzer, do not postpone a release, even if not all vulnerabilities are fixed.

Step 4. Fix vulnerabilities

How you allocate resources, whether you engage new people or assign the work to those already involved in development, depends on your budget. The truth is that if a company pays for an analyzer installation, it should also be prepared for the cost of fixing vulnerabilities. One option here is to treat vulnerability fixing as technical debt and allocate a certain percentage of time to it.
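The percentage approach is simple arithmetic, sketched below with made-up numbers. The task tuples `(name, priority, hours)`, the 200 team hours, and the 15% share are assumptions for illustration; the greedy "most urgent first" rule is one possible policy, not the only one.

```python
def plan_fixes(tasks, team_hours, security_percent):
    """Split tasks into those that fit the security time budget and those deferred."""
    budget = team_hours * security_percent / 100
    planned, deferred = [], []
    # Lower priority number = more urgent, so urgent tasks claim the budget first.
    for name, priority, hours in sorted(tasks, key=lambda task: task[1]):
        if budget >= hours:
            planned.append(name)
            budget -= hours
        else:
            deferred.append(name)
    return planned, deferred


tasks = [
    ("Replace weak hash", 3, 24),
    ("Fix SQL injection", 1, 16),
    ("Remove hardcoded password", 2, 4),
]
planned, deferred = plan_fixes(tasks, team_hours=200, security_percent=15)
print("This release:", planned)
print("Later:", deferred)
```

With a 30-hour budget, the two most urgent tasks (16 and 4 hours) fit, and the 24-hour task moves to a later release instead of delaying this one.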

There are two scenarios: fixing during development or fixing upon a request from a security officer. Fixing during development is ideal, as it allows a vulnerability to be fixed right after detection: a developer scans the feature codeline before requesting a merge into the mainline. This can also be automated through integration with a development environment, build tools, a version control system, or CI/CD.

The other scenario is to analyze the mainline after all changes have been merged. In this case, a security officer or a team lead reviews the results and creates vulnerability fixing tasks, which requires more effort. The developer’s inputs are the source code, the vulnerability fixing task, and the analysis results. From a developer’s point of view, vulnerability fixing is little different from bug fixing.

Step 5. Check fixing results

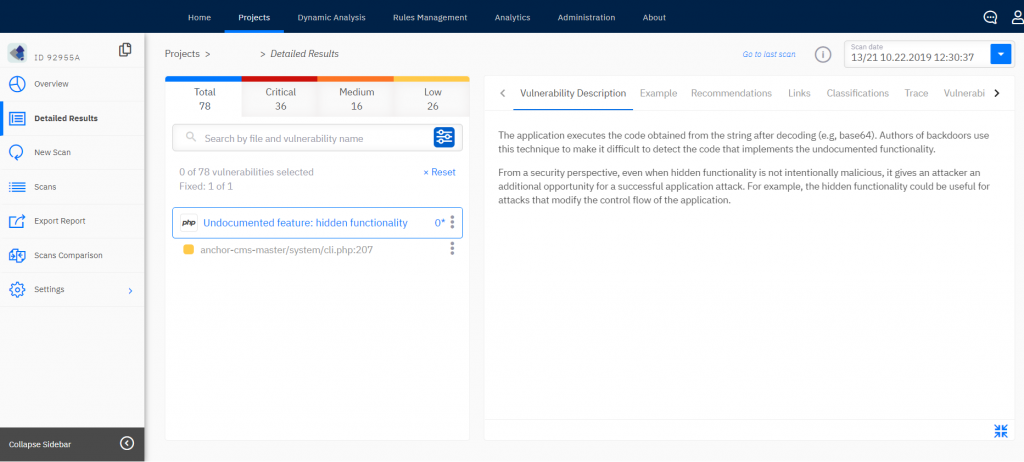

It’s important to make sure that the vulnerability has really been fixed and not replaced with a new one. You have the analysis results for the fixed code. What should you search for? The file and line number where the vulnerability was found? Or the vulnerability name? Both options are OK. However, if the code has changed, the line with the vulnerability may have shifted, and there may still be many occurrences of the same vulnerability. Some analyzers can show “fixed” vulnerabilities, allowing you to check the fix faster (see screenshot).
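If your analyzer does not mark fixed vulnerabilities itself, two scans can be compared by hand. The sketch below sidesteps the shifted-line problem by identifying each finding with a fingerprint built from the rule, the file, and the whitespace-normalized code of the flagged line instead of its line number. This fingerprint scheme is an assumption made for illustration, and it has a known limit: two identical flagged lines in one file collapse into a single fingerprint.

```python
import hashlib


def fingerprint(rule: str, path: str, code_line: str) -> str:
    """Identify a finding by what it is and where, ignoring line numbers and indentation."""
    normalized = " ".join(code_line.split())
    return hashlib.sha256(f"{rule}|{path}|{normalized}".encode("utf-8")).hexdigest()


def compare_scans(before: set[str], after: set[str]) -> dict[str, set[str]]:
    """Classify fingerprints as fixed, still present, or newly introduced."""
    return {
        "fixed": before - after,
        "remaining": before & after,
        "new": after - before,
    }


sqli = fingerprint("SQL injection", "src/db.py", "cursor.execute(query + user_id)")
xss_before = fingerprint("XSS", "src/views.py", "return render(comment)")
# Same code after the fix, but re-indented and on a different line.
xss_after = fingerprint("XSS", "src/views.py", "        return render(comment)")
traversal = fingerprint("Path traversal", "src/files.py", "open(base + name)")

diff = compare_scans(before={sqli, xss_before}, after={xss_after, traversal})
print({status: len(items) for status, items in diff.items()})
```

A non-empty "new" set is the signal that a fix may have replaced one vulnerability with another.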

Examples

Here are two possible scenarios:

Scenario 1

A team uses Git for version control and Jira for project and task management. Jenkins is used for build scheduling, for example, once a day if there were changes in a codeline. A “run analysis” instruction is specified as an additional step.

A security officer reviews analysis results of a mainline (master or development), with developers doing the same for their feature codelines.

Developers fix vulnerabilities, if required. If the analyzer can filter vulnerabilities on request (show new vulnerabilities only, hide vulnerabilities in third-party libraries, show vulnerabilities in a specific directory or file), a developer can quickly view the relevant results, which makes a fix more likely.

A security officer views the mainline analysis results. Thanks to Jira integration, the security officer creates vulnerability fixing tasks directly from the analyzer interface, after which timeframes and priorities are agreed.

When a vulnerability is fixed and the new code has passed through all internal development stages (team lead review, user and regression testing), the security officer must make sure that the vulnerability no longer exists. The author of the task can then close it.

Scenario 2

The company’s code is developed by a group of contractors. A team lead interacts with developers via email and uses GitLab for version control. Contractors have no access to internal project management systems (Jira).

Either a security officer or a team lead reviews the mainline analysis results. Contractors have no access to an analyzer. If there are vulnerabilities that need to be fixed, a security officer sends a PDF report with analysis results to a team lead or directly to a developer.

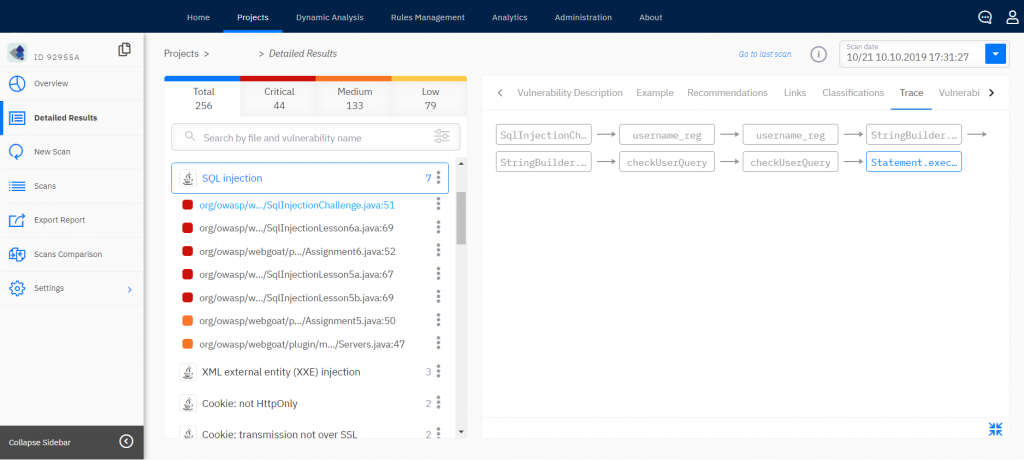

From this report, the developer learns about vulnerability location, nature, and fixing options. Ideally, for vulnerabilities related to insecure data flow (such as SQL injection), the report should provide a diagram of propagation, from a data source to where the data is used in a function/method call (see screenshot).

After the vulnerability is fixed, a team lead informs a security officer that it is time to rescan and check results again.

Other important matters

Companies should foster effective interaction between information security and development teams to ensure both are truly interested in fixing vulnerabilities. Combining formal requests with networking is a good idea here.

It is important to define user roles, and good analyzers support role-based access. For example, a contractor is unlikely to require admin rights or access to internal system analysis results. The right to edit results (change severity and status) should be given to a security officer and a team lead only. Follow the principle of least privilege (PoLP) as your best practice.
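A role model along these lines can be expressed as a plain mapping from roles to permissions. The role names and the four permissions below are illustrative assumptions, not the access model of any specific analyzer; the point is that each role gets only what it needs and unknown roles get nothing.

```python
from enum import Enum, auto


class Permission(Enum):
    VIEW_PROJECT_RESULTS = auto()   # results for the project a user works on
    VIEW_INTERNAL_RESULTS = auto()  # results for internal systems
    EDIT_RESULTS = auto()           # change severity and status
    ADMINISTER = auto()             # manage users and settings


ROLE_PERMISSIONS = {
    "admin": {Permission.ADMINISTER, Permission.VIEW_PROJECT_RESULTS,
              Permission.VIEW_INTERNAL_RESULTS},
    "security_officer": {Permission.VIEW_PROJECT_RESULTS,
                         Permission.VIEW_INTERNAL_RESULTS, Permission.EDIT_RESULTS},
    "team_lead": {Permission.VIEW_PROJECT_RESULTS,
                  Permission.VIEW_INTERNAL_RESULTS, Permission.EDIT_RESULTS},
    "developer": {Permission.VIEW_PROJECT_RESULTS, Permission.VIEW_INTERNAL_RESULTS},
    "contractor": {Permission.VIEW_PROJECT_RESULTS},
}


def is_allowed(role: str, permission: Permission) -> bool:
    """Least privilege: anything not explicitly granted is denied."""
    return permission in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("team_lead", Permission.EDIT_RESULTS))
print(is_allowed("contractor", Permission.VIEW_INTERNAL_RESULTS))
```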

If a company is large and employs a user management system (such as Active Directory), then consider tools with ready-to-use integration. You will be able to use roles and groups already established at the company and assign necessary rights to them.

Conclusion

After choosing an analyzer, remember that implementing it correctly is no less important. Do not be afraid of meetings and discussions with other people: the better you understand the process, the more actionable results you will get. The key point about a workflow is not just to approve it but to ensure continued adherence.
